Thursday, December 30, 2010

30 December 2010

Wednesday, December 29, 2010

29 December 2010

Tuesday, December 28, 2010

28 December 2010

Monday, December 27, 2010

27 December 2010

Went back to 3DS Max today to create some 3D scenes for us to use in testing. Got that part up pretty quickly; all I had to do was recall the steps required to create the scenes and export them into POD files. I was supposed to work on adding labels to the different nodes in the models for our application. However, I found out that translation was not working on the iPad.

Hence, I went back to fix the pan gesture for translation. Things were working well in the iPhone application, but on the iPad, the translation was a little off. Spent some more time studying the sample application, and realized that it uses a method to convert points in the view's coordinate space into points in the eaglLayer's coordinate space. The iPad view was larger than the eaglLayer that we created, so I figured that this conversion would solve the problem.

Got this implemented and the translation became more accurate. There was no need for conversion in the iPhone application as the view's size is the same as the eaglLayer's size. It still was not perfect though. The translation for the heart models worked well, but not for the cylinders. I really wonder why...
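A minimal sketch of what such a conversion boils down to, assuming the smaller EAGL layer sits inside the iPad view at some offset and has no transform applied. The types `Point2` and `Rect2` and the function `view_point_to_layer` are hypothetical stand-ins for `CGPoint`, `CGRect` and the Core Animation conversion method, written in C only for illustration:

```c
/* Hypothetical stand-ins for CGPoint and CGRect. */
typedef struct { float x, y; } Point2;
typedef struct { float x, y, width, height; } Rect2;

/* Convert a touch point from the parent view's coordinate space into the
   layer's own space. Assumes the layer has no transform, so only the
   layer's frame origin inside the view matters.
   Returns 1 if the converted point lies within the layer, 0 otherwise. */
int view_point_to_layer(Point2 p, Rect2 layer_frame, Point2 *out)
{
    out->x = p.x - layer_frame.x;
    out->y = p.y - layer_frame.y;
    return out->x >= 0.0f && out->y >= 0.0f &&
           out->x <= layer_frame.width && out->y <= layer_frame.height;
}
```

When the layer's frame origin is (0, 0), the point comes back unchanged, which matches the observation that the iPhone application needed no conversion.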

Friday, December 24, 2010

24 December 2010

Thursday, December 23, 2010

23 December 2010

Wednesday, December 22, 2010

22 December 2010

Tuesday, December 21, 2010

21 December 2010

Monday, December 20, 2010

20 December 2010

Thursday, December 16, 2010

16 December 2010

Wednesday, December 15, 2010

15 December 2010

Tuesday, December 14, 2010

14 December 2010

Monday, December 13, 2010

13 December 2010

Saturday, December 11, 2010

06 December 2010 - 10 December 2010

Sunday, December 5, 2010

29 November 2010 - 03 December 2010

I worked on creating the animations for the 3D models for most of this week. First, I worked with Blender. Got the animations up fairly quickly, though they are just simple mesh animations. However, the frames got lost when the .dae file was converted to a .pod with the Collada2POD utility. Tried searching the web for help, but couldn't find any. I even resorted to posting on the PowerVR forums for help, but have not received any reply, even now.

Hence, I decided to try exporting the model directly into a .pod file. To do that, I would have to work with either Autodesk 3D Studio Max or Autodesk Maya, since .pod export plug-ins are only provided for these 2 modeling tools. Decided to use 3D Studio Max since one of our colleagues has the license for it.

Since 3D Studio Max can only be run on either a Windows or a Linux platform, I had to work with Bernard, who is also the one with the license for the software. Talked to him for a bit and he allowed me to use the Windows PC at his desk, since he would be away on a course for 2 days. It was really nice of him.

But after that, Kevin set up Boot Camp on the MacBook Pro and installed Windows on it. So instead of going over to Bernard's desk, I decided to work on the MacBook Pro. I installed the trial version of 3D Studio Max and played around with it. I feel like a graphics designer when working with these modeling tools! It's a whole new thing to me, and I had to look for tutorials to learn the various functions of the modeling tool. It is another valuable learning process for me.

I also worked with Gim Han to get the heart mesh from him. He sent me 3 different models with different vertex sets. I managed to get the vertex animations up fairly quickly, using a technique called morphing.
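Morphing here means blending between morph targets that share the same vertex layout. A minimal C sketch, assuming each model is a flat array of x, y, z floats with the same vertex count and order (`morph_lerp` and `morph_sequence` are hypothetical helpers, not part of any PowerVR or 3D Studio Max API):

```c
#include <stddef.h>

/* Blend two morph targets with identical vertex layouts:
   out[i] = a[i] + t * (b[i] - a[i]) for t in [0, 1].
   'count' is the number of floats (3 per vertex: x, y, z). */
void morph_lerp(const float *a, const float *b, float t, float *out, size_t count)
{
    for (size_t i = 0; i < count; ++i)
        out[i] = a[i] + t * (b[i] - a[i]);
}

/* Sample a looping sequence of morph targets (e.g. 3 heart models).
   'phase' must be >= 0: its integer part picks the current target, the
   fractional part blends towards the next one, wrapping back to the first. */
void morph_sequence(const float *const *targets, size_t num_targets,
                    float phase, float *out, size_t count)
{
    size_t whole = (size_t)phase;
    size_t i = whole % num_targets;
    size_t j = (i + 1) % num_targets;
    float t = phase - (float)whole;
    morph_lerp(targets[i], targets[j], t, out, count);
}
```

Because every frame is a blend of whole vertex arrays, the result only survives export if the target format can store per-vertex animation.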

The problem came just when we thought that the animations were complete. When I tried exporting the model into a .pod file, the animations were missing. Then it came back to me. POD files do not support vertex animations. We had a discussion with Kevin, and decided that we should move on to the other components of our project first. While working on the other components, we would have to read up on other techniques that we could use to come up with the animations.

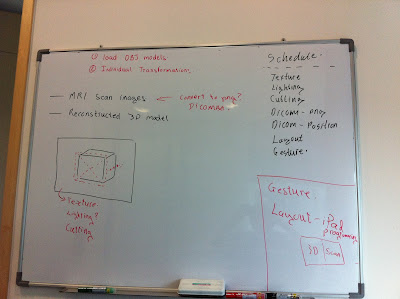

Hence, on Friday, we watched the podcast lectures to find out more about the UI controls for the iPad, namely UISplitViewController and UIPopoverController. I played around with these controls, and we discussed the actual UI layout for our iPad application. Kevin, Zac and I gave our suggestions as to how the interface should look. My experience from my Major Project, which is also a medical application, helped quite a bit, since I was the one working on the UI then. We managed to arrive at a layout that we think gives the best user experience.

I managed to get the UISplitViewController working, but there is still a problem when the interface orientation changes to landscape on the iPad. Somehow, the EAGLView cannot be seen at all, even though it is still there. Then we realized that it has something to do with the animation that draws the view. If the animation is stopped before the rotation, we can see the view clearly. However, the moment we turn the animation back on, it disappears from sight once again.

Spent quite some time debugging, but still could not solve the problem. We would have to work on this next week.

I am glad that in TP, we get many chances to work with others on our assignments. With this experience, I was able to work better with my colleagues whenever I needed help from them.

Friday, November 26, 2010

26 November 2010

http://www.imgtec.com/powervr/insider/powervr-collada2pod.asp

Managed to get some sample .dae files to test out the conversion, and this website provides some good models:

Once I got the conversion done, I tested it with PVRShaman. This utility lets me import my .pod files and view them. I can even rotate and pan the model, and switch the display to wireframe. With that, I can see that the .dae file is converted to .pod nicely. Here's the utility:

http://www.imgtec.com/powervr/insider/powervr-pvrshaman.asp

Many 3D modeling tools out there are able to export models into .dae files. Now the question is, can I use Blender to export the models into .pod files directly? If that can be done, we can skip the conversion step. Did quite a lot of research, and realized that most people out there export to .dae and then convert with Collada2POD instead.

I then looked into the requirements for having animations in .pod files. Did some analysis on the .dae files that I downloaded, and found out that the animations are actually inside the .pod file itself. Going through some forums, I found that many users create animations by capturing the frames. Once they have the animations up, they can simply export the 3D model into a .dae file, which can then be converted to a .pod file. That way, we would have our animations.
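Unlike morphing, this kind of animation stores a transform per captured frame rather than a whole set of vertices. As a rough illustration of how a player samples such data between captured frames, here is a C sketch that linearly interpolates a position track; the `Keyframe` struct and `sample_track` function are hypothetical and do not reflect the actual POD file layout:

```c
#include <stddef.h>

/* Hypothetical keyframe: a time stamp plus the position captured at that frame. */
typedef struct { float time, x, y, z; } Keyframe;

/* Linearly interpolate a position track at time t. Keys must be sorted by
   strictly increasing time and n must be at least 1. Times outside the
   track clamp to the first or last key. */
void sample_track(const Keyframe *keys, size_t n, float t, float out[3])
{
    const Keyframe *k0 = &keys[0], *k1 = &keys[0];
    if (t > keys[0].time && t < keys[n - 1].time) {
        size_t i = 0;
        while (keys[i + 1].time <= t)   /* find keys[i].time <= t < keys[i+1].time */
            ++i;
        k0 = &keys[i];
        k1 = &keys[i + 1];
    } else if (t >= keys[n - 1].time) {
        k0 = k1 = &keys[n - 1];
    }
    float u = (k1 == k0) ? 0.0f : (t - k0->time) / (k1->time - k0->time);
    out[0] = k0->x + u * (k1->x - k0->x);
    out[1] = k0->y + u * (k1->y - k0->y);
    out[2] = k0->z + u * (k1->z - k0->z);
}
```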

Thursday, November 25, 2010

25 November 2010

Wednesday, November 24, 2010

24 November 2010

Tuesday, November 23, 2010

23 November 2010

Monday, November 22, 2010

22 November 2010

Although we understood what was going on, we still could not come up with a proper solution. The center of the object does not follow our touch at all.

Later in the day, Kevin came in and brought up his solution. We tried it out, but there were still some minor problems. Gonna work out those problems with Zac tomorrow.

Friday, November 19, 2010

19 November 2010

*edit* Translation was not working well. Had to tweak it here and there. Spent some time trying to understand what happens when the model moves along the negative z-axis. Somehow got the formula up, but still have to try it out on Monday to see if it works.
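One way to reason about it: with a perspective projection, a one-pixel drag covers more world space the farther the model is down the negative z-axis. A C sketch under stated assumptions: a symmetric frustum set up with glFrustumf whose top edge is `top` at distance `z_near`, with right/top equal to the viewport's width/height so pixels are square. The function names are hypothetical:

```c
/* World-space distance covered by one screen pixel at eye-space depth z.
   Assumes glFrustumf(-right, right, -top, top, z_near, z_far) with
   right / top == viewport width / height (square pixels), and z < 0
   (the camera looks down the negative z-axis). */
float world_units_per_pixel(float z, float top, float z_near, float viewport_height)
{
    float distance = -z;                                   /* how far in front of the camera */
    float visible_height = 2.0f * top * distance / z_near; /* grows linearly with distance */
    return visible_height / viewport_height;
}

/* Turn a pan delta in pixels (UIKit: +y points down) into a world-space
   translation for a model sitting at depth z. */
void pan_to_world(float dx_px, float dy_px, float z, float top, float z_near,
                  float viewport_height, float *dx_world, float *dy_world)
{
    float s = world_units_per_pixel(z, top, z_near, viewport_height);
    *dx_world = dx_px * s;
    *dy_world = -dy_px * s;   /* dragging down the screen moves the model down (-y) */
}
```

Doubling the model's distance doubles how far it moves per pixel, which is why a translation formula that ignores z drifts once the model is pushed back.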

Thursday, November 18, 2010

18 November 2010

Moved on to using the pinch gesture for scaling, and managed to get it up. Still got some tweaking to do tomorrow though. Got to watch the podcast lecture first.
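UIPinchGestureRecognizer reports a `scale` factor relative to the start of the gesture (unless it is reset), so a common pattern is to multiply it onto the scale the model had when the pinch began. A minimal sketch in C; `pinch_scale` and its clamp limits are hypothetical values to be tuned:

```c
/* Combine the model's scale when the pinch began with the recognizer's
   cumulative scale factor, clamped to [min_scale, max_scale] so the model
   cannot vanish or blow up. */
float pinch_scale(float scale_at_start, float gesture_scale,
                  float min_scale, float max_scale)
{
    float s = scale_at_start * gesture_scale;
    if (s < min_scale) return min_scale;
    if (s > max_scale) return max_scale;
    return s;
}
```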

With that done, Zac and I then moved on to researching how to interact with individual objects. We found 2 different approaches: one makes use of a radius, the other makes use of colors. After weighing the pros and cons, we decided to go with colors first. Got things working nicely by the end of the day :) We're able to do individual object rotations with multiple objects loaded onto the view.
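The color approach generally works by drawing each object in a unique flat color in an extra pass, reading back the pixel under the touch with glReadPixels, and mapping that color back to an object. A C sketch of the ID/color mapping, assuming an 8-bit-per-channel RGB framebuffer and ID 0 reserved for the background (`RGB8`, `id_to_color` and `color_to_id` are hypothetical). For the colors to come back exactly, lighting, texturing, blending and dithering (glDisable(GL_DITHER)) typically need to be off during that pass:

```c
#include <stdint.h>

typedef struct { uint8_t r, g, b; } RGB8;

/* Encode an object ID (1 .. 0xFFFFFF; 0 is reserved for the background)
   as the flat color to draw that object with in the picking pass. */
RGB8 id_to_color(uint32_t id)
{
    RGB8 c;
    c.r = (uint8_t)((id >> 16) & 0xFF);
    c.g = (uint8_t)((id >> 8) & 0xFF);
    c.b = (uint8_t)(id & 0xFF);
    return c;
}

/* Decode the color read back under the touch into the object ID;
   0 means the touch hit the background. */
uint32_t color_to_id(RGB8 c)
{
    return ((uint32_t)c.r << 16) | ((uint32_t)c.g << 8) | (uint32_t)c.b;
}
```

If the framebuffer is RGB565 instead, the low bits of each channel are lost, so IDs would need to be spaced out accordingly.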

Tuesday, November 16, 2010

16 November 2010

Monday, November 15, 2010

15 November 2010

I then moved on to find out how to do simultaneous rotations on both the x and y axes. From there, I looked into matrices, as they are what control the model's transformations. I had a little knowledge of matrices as I took additional maths in secondary school, but I was not very good at it. Did some research by reading through the explanations on the iphonedevelopment.blogspot.com blog and in the OpenGL ES redbook. Got to understand matrices a bit more, and managed to improve the rotations. There are still some faults here and there though. Have to look into matrices more tomorrow!

Sunday, November 14, 2010

12 November 2010

We realized that normalized device coordinates always range from -1.0 to 1.0 on both the x and y axes. Hence, when translating window coordinates to normalized device coordinates, I have to scale the values down. With this, I was able to translate the positions properly.
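The scaling can be written as a simple linear map. A minimal sketch in C, assuming touch locations come in UIKit's convention (origin at the top-left, +y pointing down) while normalized device coordinates have +y pointing up; `Vec2` and `window_to_ndc` are hypothetical:

```c
typedef struct { float x, y; } Vec2;

/* Map a touch location in window coordinates (UIKit: origin top-left, +y down)
   to normalized device coordinates, where x and y both run from -1.0 to 1.0
   and +y points up. */
Vec2 window_to_ndc(float x, float y, float width, float height)
{
    Vec2 ndc;
    ndc.x = 2.0f * x / width - 1.0f;
    ndc.y = 1.0f - 2.0f * y / height;
    return ndc;
}
```

For a 320 x 480 view, the top-left corner maps to (-1, 1), the center to (0, 0) and the bottom-right corner to (1, -1).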

The next thing that I am trying to solve is the problem with perspective. If the zNear and zFar values are not positive, we would not be able to see any perspective. But once we have perspective, the translated coordinates are not displayed the way we want them to be. Hence, I would have to spend more time on Monday trying to understand how this works.
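For reference, this is the matrix glFrustumf multiplies onto the current matrix, written as a C sketch in OpenGL's column-major layout. The OpenGL ES reference pages state that glFrustumf generates GL_INVALID_VALUE when zNear or zFar is not positive (or when left equals right, or bottom equals top); the hypothetical `frustum_matrix` mirrors that by returning 0 and also rejects zNear equal to zFar, which would divide by zero. The -1 in the bottom row is what copies -z into w, so the later divide by w is what produces perspective:

```c
/* Build the matrix glFrustumf multiplies onto the current matrix, in
   OpenGL's column-major order (element (row, col) is m[col * 4 + row]).
   Returns 0 and leaves m untouched for rejected arguments:
   n or f not positive, l == r, b == t, or n == f. */
int frustum_matrix(float m[16], float l, float r, float b, float t, float n, float f)
{
    if (n <= 0.0f || f <= 0.0f || l == r || b == t || n == f)
        return 0;
    for (int i = 0; i < 16; ++i)
        m[i] = 0.0f;
    m[0]  = 2.0f * n / (r - l);
    m[5]  = 2.0f * n / (t - b);
    m[8]  = (r + l) / (r - l);
    m[9]  = (t + b) / (t - b);
    m[10] = -(f + n) / (f - n);
    m[11] = -1.0f;                 /* w_clip = -z_eye: the source of the perspective divide */
    m[14] = -2.0f * f * n / (f - n);
    return 1;
}
```

For a symmetric frustum, a point at eye-space x lands at x_ndc = m[0] * x / -z, so the same x moves closer to the center as the point moves further away. That depth dependence is exactly what a plain window-to-NDC conversion does not account for.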

Spent some time looking into swipe gestures as well. Earlier this week, I was only able to swipe in one direction, left to right. I found out that we are actually able to swipe up, down, left and right. Now the next thing that I want to look into is whether swipe gestures would work when I have pan gestures added to the view. These gestures do not seem to work well on the simulator. I might request to try this out on a real device to see if it's a limitation of the simulator.

Thursday, November 11, 2010

11 November 2010

I then decided to revert to what the template used to set up glFrustumf, and the cube was drawn nicely. However, the coordinate translation went haywire. Therefore, I suspect that my issue has to do with how glFrustumf is set up. Hence, I decided to do more research on this to gain a better understanding.

Kevin came in to check on us at the end of the day. I had the direction in mind, but once again, I failed to express it properly, which caused confusion for Kevin and Zac. I probably went into too much detail too. Kevin then told me to research the following terms aside from just glFrustumf:

- glMatrixMode

- glViewport

- glLoadIdentity
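In short, glMatrixMode selects which matrix (GL_PROJECTION or GL_MODELVIEW) later calls such as glLoadIdentity and glFrustumf act on, glLoadIdentity resets that matrix to the identity, and glViewport sets how normalized device coordinates map onto window pixels. That last mapping is a fixed formula in the OpenGL ES specification, sketched here in C (`Viewport` and `ndc_to_window` are hypothetical names; GL window coordinates have their origin at the bottom-left):

```c
/* The four arguments passed to glViewport (hypothetical struct). */
typedef struct { float x, y, width, height; } Viewport;

/* Viewport transform: maps normalized device coordinates (-1 .. 1) to
   window coordinates, whose origin is the bottom-left corner of the drawable. */
void ndc_to_window(const Viewport *vp, float xd, float yd, float *xw, float *yw)
{
    *xw = (xd + 1.0f) * (vp->width / 2.0f) + vp->x;
    *yw = (yd + 1.0f) * (vp->height / 2.0f) + vp->y;
}
```

Going from a touch back to normalized device coordinates is the inverse of this, plus a y-axis flip between GL's bottom-left origin and UIKit's top-left one.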

Gonna do some research back at home, though Kevin said that I can get it done by Monday.

Wednesday, November 10, 2010

10 November 2010

Tuesday, November 9, 2010

09 November 2010

Monday, November 8, 2010

08 November 2010 - Start of OpenGL ES & Project

Thursday, November 4, 2010

04 November 2010 - Bonjour Completed! :D

Wednesday, November 3, 2010

03 November 2010 - Bonjour, multiple iOS devices sending data to OS X (listener)

Tuesday, November 2, 2010

02 November 2010

Monday, November 1, 2010

01 November 2010 - Bonjour Application, Verge of Completion?

Friday, October 29, 2010

29 October 2010 - More Bonjour!

28 October 2010 - Bonjour again

Wednesday, October 27, 2010

27 October 2010 - Bonjour

Kevin's right, we have to learn to express ourselves better. If we are unable to express ourselves well, we tend to get the wrong message across, resulting in poor communication. The project could be steered onto another path just because we failed to express ourselves well enough. Learnt this important lesson from Kevin today. Thanks Kevin :)

Managed to publish the NSNetService properly today. Gonna work on multiple interactions tomorrow. Hope that we can come up with something good :)

Tuesday, October 26, 2010

26 October 2010 - More on Touch

Watched the lecture on the Bonjour protocol while waiting for Kevin to check on us. It's really cool! There are all sorts of things we can do with it; it's really up to our imagination.

*edit* added some animations to the second application after Kevin came and looked at our work.